Recommended

Web Summit Vancouver: Brands in Uncertain Times

Recently, Angus Reid, CEO of his namesake company, the Angus Reid Group, took centre stage at Web Summit Vancouver with Tyler Turnbull, Global CEO of FCB. On the docket? Insights from their groundbreaking joint Citizen Consumer Research Study. What started as a...

The Moderator’s Takeaways from Brands in Uncertain Times: The Citizen Consumer Webinar

Trust and optimism used to rise and fall with the political tides. Not anymore. Both, it seems, have washed out to sea. Our latest research reveals that a strong majority of Canadians and Americans no longer have confidence in their system of government. Nearly half of...

Angus Reid Institute’s election projections are a statistical bullseye

As votes were counted in this week’s federal election, one thing became clear: the Angus Reid Institute’s polling program delivered one of the most accurate forecasts of the campaign, not just nationally but provincially, too. Their final projection had the Liberals...

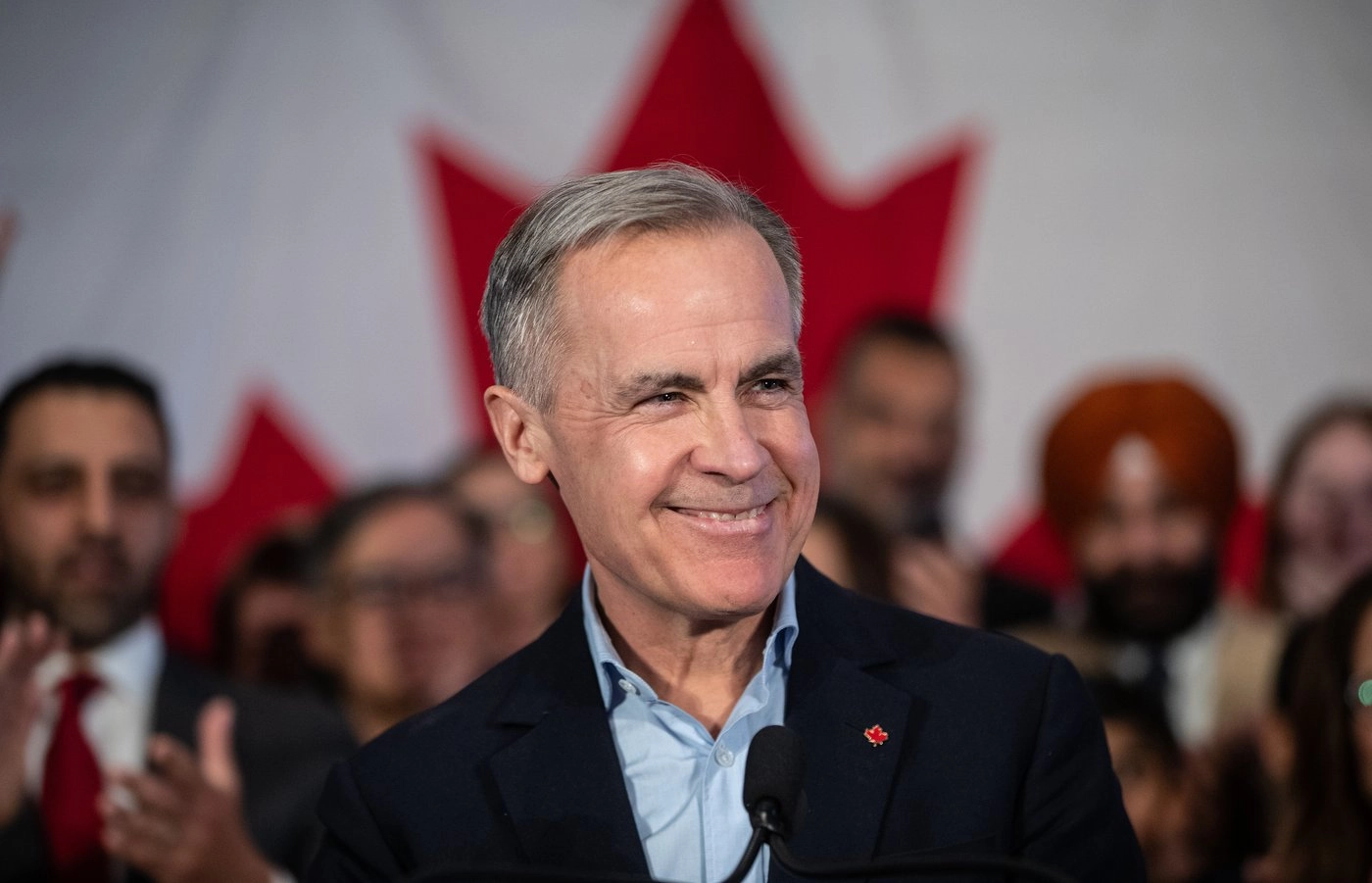

Shachi Kurl puts Canada’s new PM on notice in the New York Times

In case you missed it, Shachi Kurl, President of the Angus Reid Institute, penned a must-read piece in The New York Times this week about Canada’s surprising federal election outcome and the challenges now facing new Prime Minister Mark Carney. You can read it here: The...